November 21, 2024

Kevin Montgomery


AI services and products are only as good as the information they’re providing. Using Retrieval Augmented Generation (RAG) with Managed Search can help improve AI outputs and trust.

Better Results from AI and LLMs – Building RAG with Managed Search

Advancements in transformer architecture, reinforcement learning and training processes have led to notable improvements in natural language processing (NLP) and text generation with Large Language Models (LLMs). These LLMs are trained on a wide range of source data, including website content, social media posts, literature, source code and other text. Users can then give the model a seed prompt (e.g. “write a blog post about AI”) and the LLM will generate a response based on what it learned from its training data.

Output quality can vary depending on the input prompt and training data. In some cases LLMs may return incomplete or incorrect responses, sometimes referred to as “hallucinating.” These outputs can be improved by including relevant source material in the initial prompt, which narrows the context from which the LLM generates a response. In other words, generation is augmented with context-specific retrieval.

What is Retrieval Augmented Generation?

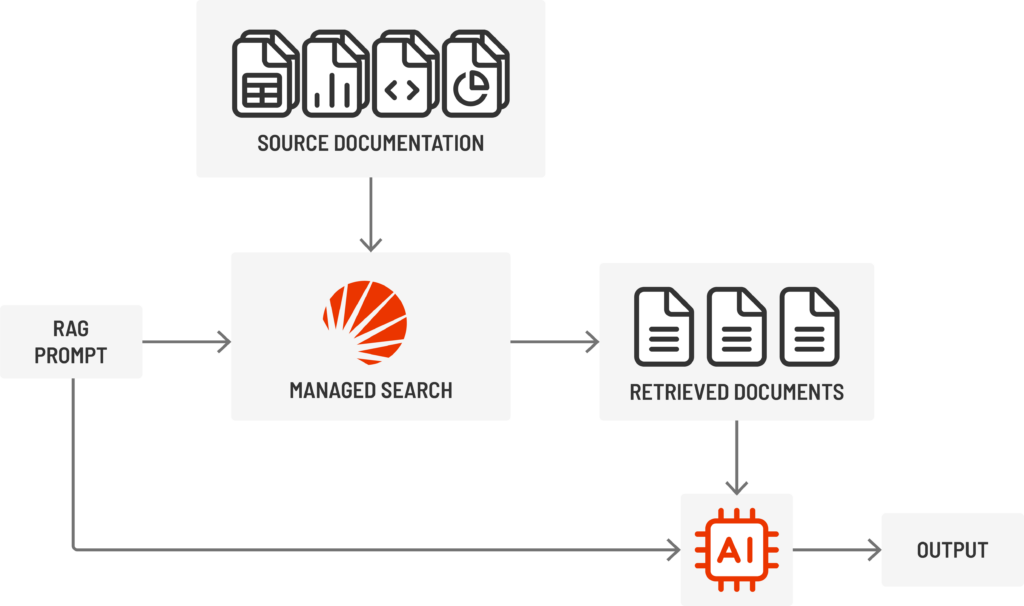

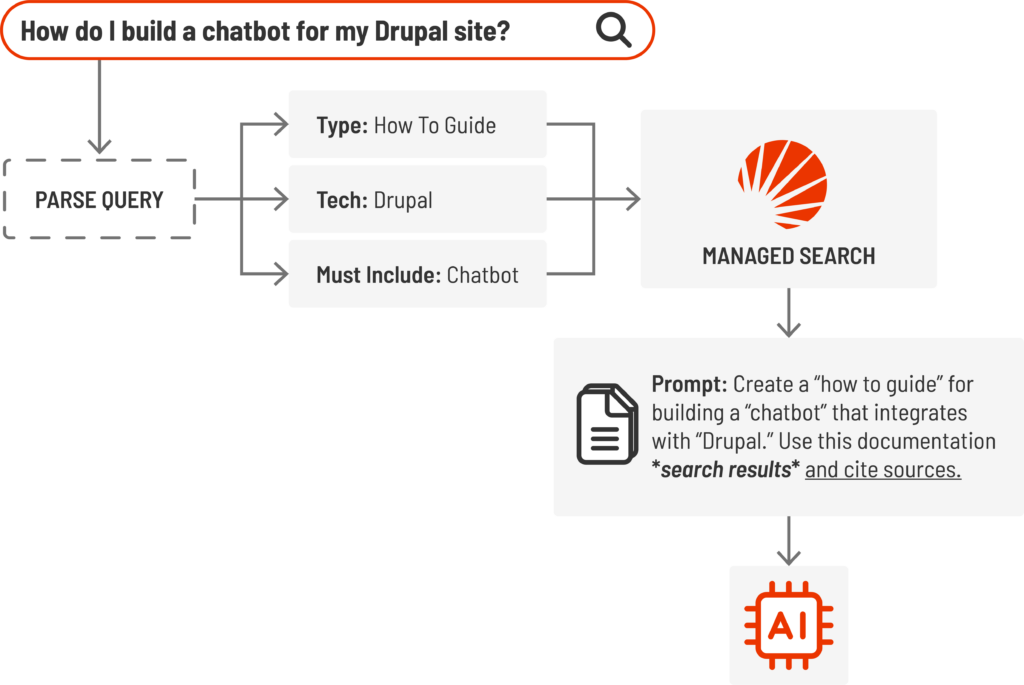

Retrieval Augmented Generation (RAG) is an information retrieval and text generation process that uses a search engine to add relevant contextual information from known good sources to a prompt before sending it to an LLM. Keywords and topics are extracted from the source prompt and sent to the search engine. The resulting documents are then appended to the source prompt, and the combined prompt is sent to the LLM. The LLM can use the additional context to generate the final response and cite the specific documents or sections of content it drew on.
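The flow described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the keyword extraction is deliberately naive, and `search` and `generate` are hypothetical callables standing in for whatever search client and LLM client you use.

```python
from typing import Callable

# Tiny illustrative stopword list; a real system would use a proper analyzer.
STOPWORDS = {"a", "an", "the", "is", "are", "how", "do", "i", "to",
             "of", "in", "for", "what", "and", "my"}


def extract_keywords(prompt: str) -> list[str]:
    """Naive keyword extraction: lowercase, strip punctuation, drop stopwords."""
    words = (w.strip(".,?!:;\"'").lower() for w in prompt.split())
    return [w for w in words if w and w not in STOPWORDS]


def build_augmented_prompt(prompt: str, documents: list[dict]) -> str:
    """Append numbered source documents to the prompt so the LLM can cite them."""
    sources = "\n".join(
        f"[{i}] {doc['title']}: {doc['body']}"
        for i, doc in enumerate(documents, start=1)
    )
    return (
        "Answer using only the sources below and cite them by number.\n\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {prompt}"
    )


def answer_with_rag(
    prompt: str,
    search: Callable[[list[str]], list[dict]],  # hypothetical search client
    generate: Callable[[str], str],             # hypothetical LLM client
    top_k: int = 3,
) -> str:
    """Retrieve, augment, then generate."""
    keywords = extract_keywords(prompt)
    documents = search(keywords)[:top_k]
    return generate(build_augmented_prompt(prompt, documents))
```

Passing the search and generation steps in as functions keeps the sketch independent of any particular search API or model provider, and makes each step easy to test in isolation.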

Why use RAG?

In addition to improving response quality in general, RAG can be used for knowledge base search and conversational systems. It reduces incorrect or incomplete responses, which is especially helpful for chat agents, customer support and other open-ended interfaces. Here’s how:

- Enhanced Knowledge Base Searches: Empower customers and employees with more precise, contextually relevant search results, improving knowledge accessibility.

- Reliable and Relevant Customer Support: Equip chat agents and support teams with accurate, up-to-date information, reducing incorrect or incomplete responses.

- Smart Assistants Optimized for Your Data: Ground virtual assistants in your internal documentation, enabling them to provide accurate answers specific to your organization.

How do I build RAG with Managed Search?

Managed Search is a powerful, scalable search platform developed by SearchStax to be easy to manage while still providing the customization and flexibility of a full-featured search engine. Managed Search environments can be easily provisioned and scaled as needed for development, testing and production-scale search workflows.

Managed Search, built on Solr, provides search-infrastructure-as-a-service with robust APIs for easy integration into your LLM prompt and generation workflows. Once source content has been added to your Managed Search indexes, you can start searching and retrieving relevant documents to feed into LLM prompting systems. Search relevancy and response can be customized and optimized for improved retrieval and better prompt outputs.
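As a rough sketch of the retrieval step, the snippet below builds a query against Solr's standard `/select` handler and pulls the documents out of the JSON response. The base URL, the collection name and the field names in `fl` are assumptions for illustration, and authentication is omitted; check your own deployment for the actual endpoint and credentials.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen


def build_select_url(base_url: str, collection: str, query: str, rows: int = 5) -> str:
    """Build a URL for Solr's /select handler.

    The field list (fl) uses hypothetical field names; adjust to your schema.
    """
    params = {"q": query, "rows": rows, "fl": "id,title,body,score", "wt": "json"}
    return f"{base_url.rstrip('/')}/{collection}/select?{urlencode(params)}"


def extract_docs(solr_response: dict) -> list[dict]:
    """Return the matching documents from a Solr JSON response."""
    return solr_response.get("response", {}).get("docs", [])


def search(url: str, timeout: float = 10.0) -> list[dict]:
    """Run the query over HTTP (no auth shown) and return the documents."""
    with urlopen(url, timeout=timeout) as resp:
        return extract_docs(json.load(resp))
```

For example, `build_select_url("https://example.invalid/solr", "kb", "reset password")` produces a URL whose results can be passed straight into the prompt-building step of a RAG pipeline.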

Source Content

RAG utilizes a known “source of truth” to reduce hallucinations and other incorrect or incomplete responses. Well-written, organized and categorized source content will greatly improve the quality and depth of responses from a RAG system.

- Index as much authoritative original content as is available

- Include relevant metadata such as categorization, tagging, content types, authors and other data that makes source content easier to find

- Utilize content structure such as headings, metadata and sections to improve understanding

Managed Search supports large-scale, high-volume content indexing through our APIs and connectors. Search data can be replicated, backed up and restored to minimize data loss while reducing downtime.
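To make the content guidelines above concrete, here is a hedged sketch that turns one article into section-level documents carrying its metadata, then prepares a request for Solr's JSON `/update` handler. The field names (`heading`, `category`, `tags`, `content_type` and so on), the base URL and the collection name are hypothetical and would need to match your own schema and deployment.

```python
import json


def to_solr_docs(article: dict) -> list[dict]:
    """Create one Solr document per section, each carrying the article metadata."""
    docs = []
    for n, section in enumerate(article["sections"], start=1):
        docs.append({
            "id": f"{article['id']}-s{n}",          # stable per-section id
            "title": article["title"],
            "heading": section["heading"],
            "body": section["text"],
            "category": article["category"],
            "tags": article["tags"],
            "author": article["author"],
            "content_type": article["content_type"],
        })
    return docs


def build_update_request(base_url: str, collection: str, docs: list[dict]):
    """Return (url, headers, body) for a JSON POST to Solr's /update handler."""
    url = f"{base_url.rstrip('/')}/{collection}/update?commit=true"
    headers = {"Content-Type": "application/json"}
    body = json.dumps(docs).encode("utf-8")
    return url, headers, body
```

Indexing at the section level, rather than one document per article, means retrieval can return only the passage that answers the question, which keeps the augmented prompt short and makes citations more specific.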

Retrieving Source Content

Establishing a limited context from trusted source content (as opposed to using the global context from all training data) provides the biggest increase in the quality and relevance of prompt outputs. Managed Search instances can be easily scaled to handle the high query volume and large document sizes typically seen in RAG implementations.

Improving Search Quality and Prompt Output

Improvements to search quality can help establish an authoritative context for LLMs. Retrieval searches can include more than just text-based search. Context-specific relevance models, search facets and filtering, as well as vector similarity and other ranking improvements can all be used for an initial query or during additional prompts to augment and refine retrieval output and final response.

Bring Your Own Model

As RAG processes evolve, some companies offer RAG within their cloud or AI platforms, yet many organizations find custom models and prompts outperform generic services.

Running your own LLMs and training processes typically entails a large amount of data management and throughput, as well as managing the cloud servers, services and data storage they depend on.

Managed Search instances can be deployed and scaled as needed in the region of choice using your preferred cloud providers. Managed Search includes deployment and monitoring APIs for flexible orchestration and alerting.

Search Infrastructure as a Service for RAG

SearchStax Managed Search is available on major cloud providers in most regions and can be easily integrated into an existing LLM stack. Features like VPC integration, high availability, backups and powerful APIs make search a first-class citizen in your organization without the overhead and uncertainty of running your own infrastructure or dealing with compliance challenges.

You can customize your Managed Search deployment so that your Solr infrastructure can grow and scale with your tech stack while remaining highly available. Managed Search includes support, backups, security scanning and patching, orchestration APIs, as well as VPC support for easy integration in your infrastructure.

SearchStax built Managed Search to provide the power and flexibility of Solr technology with the management, availability and scalability backed by our 24/7 support team, robust fault protection and more. Managed Search provides stable, scalable and predictable environments to develop, test and deploy large-scale, reliable search as part of your product.

We’re making it easier to build custom search solutions using Solr with predictable infrastructure management, resilience, and cost controls.

Getting Started with Managed Search

Schedule a Demo to learn more about Managed Search and how you can build reliable, scalable search experiences without the headaches of infrastructure management. Talk to our search experts about how Managed Search can help your team build, deliver and optimize Retrieval Augmented Generation.

Start Now for Free

Try Managed Search for 14 days and start adding reliable search to your RAG pipeline.