Sep. 04, 2018

Karan Jeet Singh


At SearchStax, we manage thousands of Apache Solr nodes for our customers around the world. We encounter some very interesting issues and have to be creative about resolving them. One of these was The Case of the Missing Data.

We recently faced an issue where data would disappear from Solr. The deployment, which was on AWS in the US East region, ingested data from Acquia, a third-party Drupal platform. Every now and then, all of the data would disappear from a collection. The client had no idea what might have caused this behavior. SearchStax was asked to unravel this mystery.

Apache Solr, out of the box, has a rich set of logging options, including command-level logging. However, it does not capture commands that arrive as the payload of an HTTP POST request. We suspected that this unmonitored channel might be the source of the trouble.
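The following minimal Python sketch makes this blind spot concrete. It builds, but does not send, the kind of request in question: a delete-by-query in Solr's JSON update format, carried entirely in the POST body. The host, the collection name `mycollection`, and the `name` field are hypothetical placeholders. Only the `{"delete": {"query": ...}}` body shape follows Solr's JSON update syntax.

```python
import json
import urllib.request

# Hypothetical Solr host and collection, for illustration only.
SOLR_UPDATE_URL = "http://localhost:8983/solr/mycollection/update"


def build_delete_request(query: str) -> urllib.request.Request:
    """Build (but do not send) a JSON delete-by-query update request.

    The destructive command lives entirely in the POST body; the URL
    itself gives no hint of what the request will do.
    """
    body = json.dumps({"delete": {"query": query}}).encode("utf-8")
    return urllib.request.Request(
        SOLR_UPDATE_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# "name" is an assumed field name used only for this example.
req = build_delete_request("name:detailed_search")
print(req.get_method(), req.full_url)
print(req.data.decode("utf-8"))
```

Anything that records only the method and path sees a routine POST to `/update`. The command that deletes documents is visible only in the body.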

Our task was to capture those sneaky payloads. For this purpose, Solr provides an update processor called LogUpdateProcessorFactory.

LogUpdateProcessorFactory lets us keep track of the update commands coming into Solr. We added this processor to a chain that runs every time the /update endpoint receives a request. The chain logs each command, including its payload, and then executes the update.
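Conceptually, a chain hands each command from one processor to the next. The Python sketch below is a toy model of that idea, not Solr's actual Java API. The `log_processor`, `run_processor`, and `run_chain` helpers are hypothetical. The "index" is just a list of dicts, and only a simple `field:value` delete is understood.

```python
from typing import Callable, Dict, List

# Toy types: a command is a dict such as {"delete": {"query": "id:2"}}.
Command = Dict[str, Dict[str, str]]
NextStage = Callable[[Command], None]
Processor = Callable[[Command, NextStage], None]


def log_processor(log: List[str]) -> Processor:
    """Record the full command (payload included), then pass it on."""
    def process(cmd: Command, next_stage: NextStage) -> None:
        log.append(f"update received: {cmd}")
        next_stage(cmd)
    return process


def run_processor(index: List[Dict[str, str]]) -> Processor:
    """Apply a simple 'field:value' delete to the toy index, then pass it on."""
    def process(cmd: Command, next_stage: NextStage) -> None:
        if "delete" in cmd:
            field, value = cmd["delete"]["query"].split(":", 1)
            # Rebuild the list in place so callers holding `index` see the change.
            index[:] = [doc for doc in index if doc.get(field) != value]
        next_stage(cmd)
    return process


def run_chain(processors: List[Processor], cmd: Command) -> None:
    """Invoke the processors in order; each one hands the command to the next."""
    def stage(i: int) -> NextStage:
        if i == len(processors):
            return lambda _cmd: None  # end of the chain
        return lambda c: processors[i](c, stage(i + 1))
    stage(0)(cmd)


log: List[str] = []
index = [{"id": "1"}, {"id": "2"}, {"id": "3"}]
run_chain([log_processor(log), run_processor(index)], {"delete": {"query": "id:2"}})
print(log)    # the logged command
print(index)  # documents 1 and 3 remain
```

The logging stage sits ahead of the stage that changes the index. As a result, every command is on record before it takes effect, which is exactly the property needed to catch an unexplained delete.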

Let’s look at how to create an update chain.

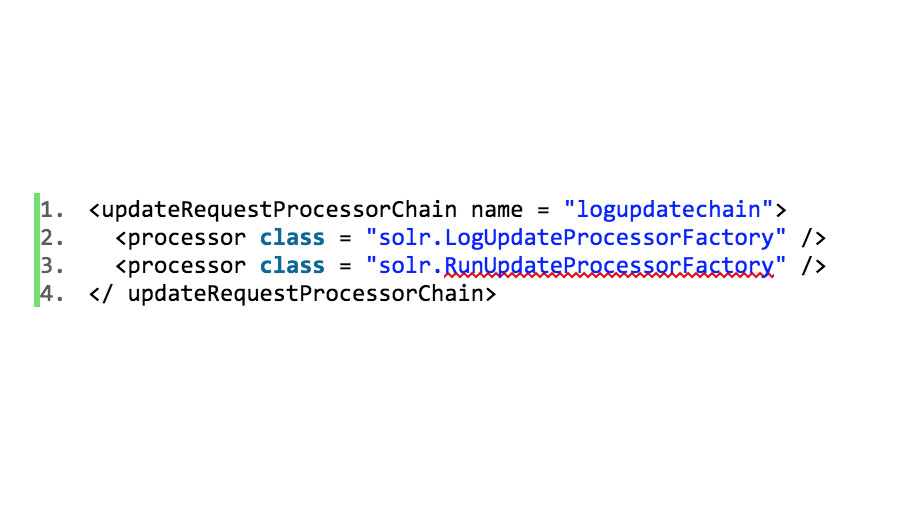

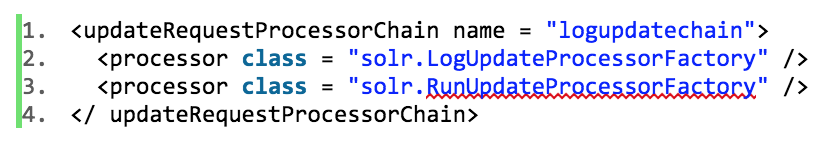

An update chain in Solr is an ordered series of update processors: each processor handles the incoming command in turn and then passes it to the next one. For this task we defined an updateRequestProcessorChain in the solrconfig.xml file and named it logupdatechain. It calls LogUpdateProcessorFactory and then RunUpdateProcessorFactory, so each update command is first logged and then executed.
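The following Python sketch shows the shape of that chain definition. It uses `xml.etree.ElementTree` only to build and print the XML element, with Solr's `solr.` class-name shorthand. The helper function is hypothetical. The printed fragment is what would go into solrconfig.xml.

```python
import xml.etree.ElementTree as ET


def build_log_update_chain(name: str = "logupdatechain") -> ET.Element:
    """Build an <updateRequestProcessorChain> that logs, then runs, each update."""
    chain = ET.Element("updateRequestProcessorChain", {"name": name})
    # Order matters: log the incoming command first...
    ET.SubElement(chain, "processor", {"class": "solr.LogUpdateProcessorFactory"})
    # ...then actually apply it to the index.
    ET.SubElement(chain, "processor", {"class": "solr.RunUpdateProcessorFactory"})
    return chain


chain = build_log_update_chain()
ET.indent(chain)  # pretty-printing; requires Python 3.9+
print(ET.tostring(chain, encoding="unicode"))
# <updateRequestProcessorChain name="logupdatechain">
#   <processor class="solr.LogUpdateProcessorFactory" />
#   <processor class="solr.RunUpdateProcessorFactory" />
# </updateRequestProcessorChain>
```

Swapping the two processors would defeat the purpose, because an update would then run before it is logged.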

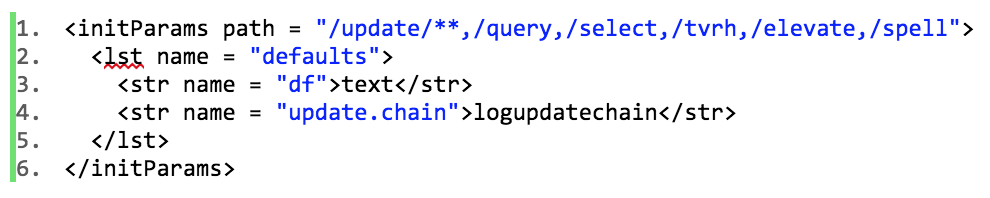

After defining the chain, we modified the update request handler, in the same solrconfig.xml file, so that it calls this chain whenever an update command is received.
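One common way to point a handler at a chain is the `update.chain` parameter, either passed on each request or set in the handler's `defaults`. The Python sketch below shows the second option by editing a solrconfig.xml string with `xml.etree.ElementTree`. The sample config and the `set_default_update_chain` helper are hypothetical; in practice you would make the equivalent edit by hand.

```python
import xml.etree.ElementTree as ET

# A hypothetical, heavily trimmed solrconfig.xml used only for illustration.
SAMPLE_CONFIG = """<config>
  <requestHandler name="/update" class="solr.UpdateRequestHandler" />
</config>"""


def set_default_update_chain(config_xml: str, chain_name: str) -> str:
    """Return config_xml with the /update handler defaulting to chain_name."""
    root = ET.fromstring(config_xml)
    handler = root.find("requestHandler[@name='/update']")
    if handler is None:
        # Newer Solr versions register /update implicitly; declaring it
        # explicitly gives us a place to attach defaults.
        handler = ET.SubElement(
            root, "requestHandler",
            {"name": "/update", "class": "solr.UpdateRequestHandler"},
        )
    defaults = handler.find("lst[@name='defaults']")
    if defaults is None:
        defaults = ET.SubElement(handler, "lst", {"name": "defaults"})
    chain_param = defaults.find("str[@name='update.chain']")
    if chain_param is None:
        chain_param = ET.SubElement(defaults, "str", {"name": "update.chain"})
    # Requests to /update use this chain unless they override update.chain.
    chain_param.text = chain_name
    return ET.tostring(root, encoding="unicode")


print(set_default_update_chain(SAMPLE_CONFIG, "logupdatechain"))
```

The net effect is a `<lst name="defaults"><str name="update.chain">logupdatechain</str></lst>` block inside the `/update` handler. Because it is only a default, a client could still select a different chain per request.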

That’s it!

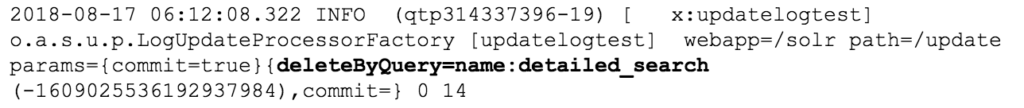

With logupdatechain in place, we captured the following in the solr.log file:

Delete commands were coming in from the client’s CMS, and one of them was deleting all documents with the name detailed_search. It turned out that every document in that collection was named “detailed_search.” Mystery solved!

By matching the timestamps in the logs, we were able to find the events in the CMS that were triggering these delete commands. Thus ended the curious case of the missing data.
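The timestamp matching step can be sketched in a few lines of Python. This assumes the delete times have already been pulled out of solr.log and the CMS events exported with their own timestamps, both in the same time zone. The `correlate` helper, the five-second window, and the sample event names are hypothetical.

```python
from datetime import datetime, timedelta
from typing import List, Tuple


def correlate(
    deletes: List[datetime],
    cms_events: List[Tuple[datetime, str]],
    window: timedelta = timedelta(seconds=5),
) -> List[Tuple[datetime, List[str]]]:
    """For each logged delete, list the CMS events within `window` of it."""
    results = []
    for deleted_at in deletes:
        nearby = [
            name for happened_at, name in cms_events
            if abs(happened_at - deleted_at) <= window
        ]
        results.append((deleted_at, nearby))
    return results


# Hypothetical sample data.
deletes = [datetime(2018, 8, 1, 12, 0, 0)]
cms_events = [
    (datetime(2018, 8, 1, 12, 0, 3), "content sync"),
    (datetime(2018, 8, 1, 14, 30, 0), "page publish"),
]
for deleted_at, events in correlate(deletes, cms_events):
    print(deleted_at.isoformat(), events)  # 2018-08-01T12:00:00 ['content sync']
```

A linear scan is fine for a handful of events. For large logs, sorting both lists and walking them together would avoid the quadratic cost.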