November 27, 2024

Jeff Dillon


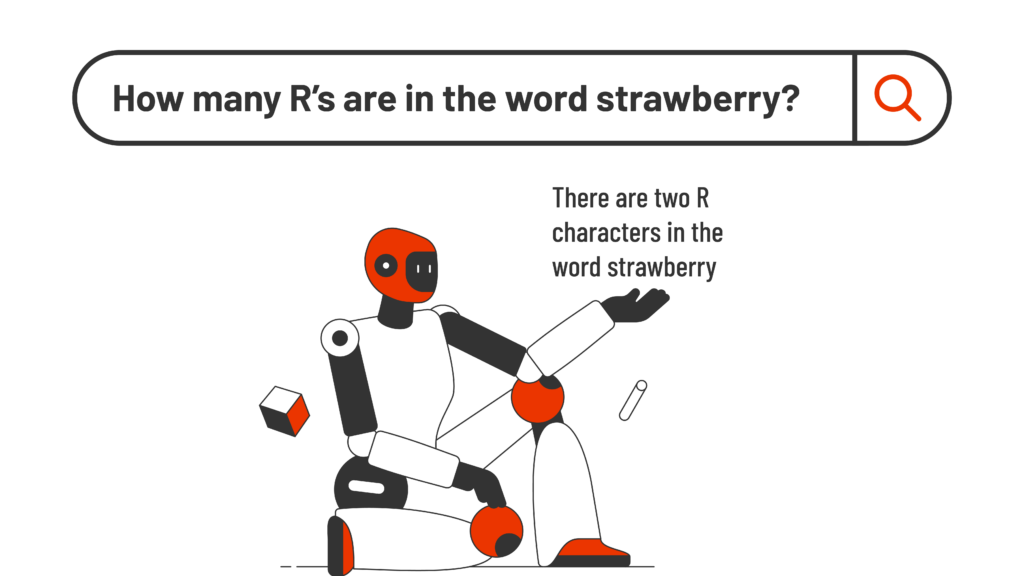

Content created at scale by artificial intelligence – also known as “AI slop” – is flooding the internet, denting user trust and information discoverability.

Digital Content Trust and Reliability are Dented by AI Slop

The internet has always been a mixed bag of quality. For every well-researched article or credible source, there are countless pieces of low-value content, misinformation or spam. Recently, a new type of content, “AI slop,” has emerged, further muddying the waters and making it harder than ever to find accurate information online.

AI slop refers to the flood of low-quality media—ranging from writing to images—generated by artificial intelligence technologies. This problem has become so widespread that it’s starting to interfere with essential services, from social media updates to vital information during crises.

And the issue is only growing worse, especially in times of urgent need, like during recent natural disasters.

AI Slop During Hurricanes: A Crisis Exposed

In early October 2024, a series of powerful hurricanes hit the southeastern U.S., leaving behind destruction and a surge in online activity. People turned to the web in droves to get real-time updates about weather conditions, shelter locations and relief efforts. However, rather than being met with clear and helpful information, many found themselves wading through a sea of AI-generated junk articles, hastily assembled social media posts and low-quality images that added to the confusion.

For instance, Twitter and Facebook feeds were flooded with content that seemed factual on the surface but contained incorrect details or ambiguous warnings. Websites scraped together AI-generated summaries of weather data, leaving out crucial specifics or even mixing up storm tracks.

The problem was compounded by the fact that AI slop can easily rank highly in search results and be amplified by social media algorithms. As a result, when people searched for “Hurricane Shelters in Miami,” the top results were often unreliable AI-generated lists that misled users or wasted their time.

This surge of AI slop is not just a minor inconvenience. In life-or-death situations like natural disasters, access to accurate information is critical, and misinformation can have serious consequences. The hurricane crisis exposed how quickly AI-generated content can spiral out of control, drowning out the more accurate and relevant information that people need in a time of crisis.

The Problem Is Everywhere: The Pervasiveness of AI Slop

Unfortunately, AI slop isn’t just limited to times of crisis. It has infiltrated nearly every corner of the web. Whether you’re looking for product reviews, researching a topic for school or trying to follow the latest news, chances are you’ve encountered AI-generated content that offers little to no value. Social media platforms like Instagram and TikTok are also seeing a surge in AI-generated visuals, from fake celebrity endorsements to poorly crafted infographics that spread misinformation or sell low-quality products.

This low-grade content is prevalent not only on major platforms but also in more niche areas, including higher education. Colleges and universities are already navigating complex environments with vast amounts of institutional knowledge, varied audience needs and deep archives of academic content. When AI slop infiltrates these academic spaces, the consequences can be especially damaging.

The Impact on Higher Education: A Trust Crisis

Higher education relies heavily on trust, both in the credibility of institutions and in the content they provide. Colleges are inherently complex organizations that need to manage an enormous range of data in their website experience, from course catalogs and research papers to enrollment forms and student services. Now, AI slop is further complicating things. Misinformation, half-baked AI-generated resources and spammy web content are increasingly making their way into educational spaces, diluting the quality of information students, staff and prospective applicants find online.

For instance, a student researching admissions requirements for a particular university might land on a poorly written AI-generated article that provides outdated or inaccurate information. This not only leads to confusion but also erodes trust in the institution itself. If higher education institutions are perceived as having poor information or disorganized content, they risk losing their reputation as credible sources of knowledge. The addition of AI slop into this already complex system makes it nearly impossible to navigate with confidence.

The Need for a Practical Approach: Balancing AI with Core Search Principles

To combat this growing issue, we need to rethink how we approach information discovery on the web. Simply replacing human-curated content with AI-generated alternatives is not the answer. In fact, this could worsen the trust and relevancy problems we’re facing today. Instead, a practical approach is necessary—one where the core principles of search (trust, relevancy and transparency) are maintained while using AI as a tool for augmentation, not replacement.

Search is about trust. When you type a query into Google or another search engine, you expect the top results to be reliable and relevant. Yet AI-generated slop can hijack search rankings, pushing low-quality content to the forefront. The solution lies in curating results more carefully: using AI to help parse vast datasets while keeping human oversight and editorial control central.

In higher education specifically, a practical approach means leveraging AI to streamline content creation and management but combining this with human-driven checks and balances. For example, AI can be used to help organize massive content libraries or aid in crafting basic web copy, but academic institutions need to ensure that human experts are reviewing and fine-tuning this content to maintain its accuracy and trustworthiness.

Rebuilding Trust in Information Discovery

The rise of AI slop has made it more difficult than ever to find high-quality, trustworthy information on the web. This issue affects everyone, from people searching for critical information during a hurricane to students researching college admissions requirements. In higher education, the stakes are particularly high, as trust in institutions is a cornerstone of their value.

To address this challenge, we must return to the core principles of search and content discovery (trust, relevancy and transparency) while using AI as an assistant, not a replacement. By adopting a practical approach that leverages AI’s strengths while maintaining human oversight, we can begin to clean up the mess that AI slop has created and rebuild trust in the information we find online.